The first shot is one with a well exposed sky & clouds, but underexposed ground:

The next shot has a well exposed ground, but an over exposed sky:

My final result (with a bit of saturation enhancement, which I was just too lazy to code in at the moment):

For those of you who may be interested, I'll even tell you the secret of my marvelous invention! It's late and Will just went back to sleep, so I don't have time to clean up the jargon though.

I started with the over exposed image, and at a certain brightness threshold, began to blend in a part of the underexposed image. So when the clouds started to get "too bright" I'd blend in a bit of the other image. Perhaps the idea was flawed, or I shouldn't have stayed in the RGB colorspace, but I ended up with a funny result:

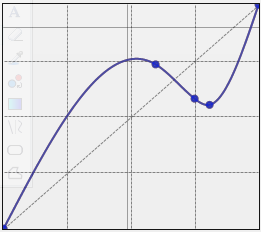

Ewww! It was pretty obvious there was a bug. One theory: I was just blending in the RGB values of the other image and producing a non-invertable response curve like the one below.

I played with a few threshold values... and when I removed the threshold and started blending immediately, I got a good result. So instead of waiting until part of the image was REALLY bright, I started blending the two immediately, with more blending the brighter the image got.

I was a bit disappointed when I looked over my code and realized that I hadn't really created a true HDR imaging program. My algorithm actually translates into a simple alpha mask (a grayscale negative of the over exposed image used as a transparency mask for the underexposed image does exactly the same thing).

I may clean up the code and post the program on blogger later (or host it on my FTP server if blogger won't let me post it).

No comments:

Post a Comment